On the internet, where large volumes of data move between a source and a destination, latency is a key concept. Simply put, latency is the time it takes for information to travel from a source to a destination and back. For example, if you request a page or a media asset through a web browser, latency is the delay between your input and the output you see in the browser. Latency is commonly measured as either round-trip time (RTT) or time to first byte (TTFB).
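To make the two measurements concrete, here is a minimal sketch that times a single plain-HTTP request using only the Python standard library. The function name `measure_latency` and the returned keys are illustrative, not part of any library. The TCP connect time is used as a rough stand-in for RTT (it also includes DNS lookup when a hostname is given), and TTFB is counted from the start of the connection.

```python
import http.client
import time


def measure_latency(host: str, port: int = 80, path: str = "/", timeout: float = 5.0) -> dict:
    """Time one plain-HTTP GET and return rough latency figures in seconds."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        start = time.perf_counter()
        conn.connect()                   # TCP handshake: roughly one round trip
        connected = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()    # returns once the status line and headers arrive
        response.read(1)                 # pull the first byte of the body
        first_byte = time.perf_counter()
    finally:
        conn.close()
    return {
        "connect_s": connected - start,  # approximation of RTT (assumption, see lead-in)
        "ttfb_s": first_byte - start,    # time to first byte, including connection setup
        "status": response.status,
    }
```

Calling `measure_latency("example.com")` several times and averaging the results gives a steadier picture than a single sample, since individual requests vary.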

The media asset in question here is video, and most commonly today a live video stream. Low latency streaming refers to streaming technology that minimizes the delay between the source of a video stream, usually a camera, and the end user’s screen. What counts as “low latency” is subjective, but in general the delay should stay below five seconds to qualify as low latency streaming.

Think of the times when you have watched a Netflix show or caught a high-stakes sporting event on a live stream. If your stream has more latency than that of a friend you are chatting with on a messaging tool, you might see their celebration or excitement a few seconds before the actual event reaches your screen.

If you are a business that relies on delivering such video streaming content or streaming of any media for that matter, you will need to avoid such delays. And to do that you will need to adopt low latency streaming technologies.

What Affects Network Latency?

Several factors contribute to network latency. These include:

Nature of Transmission

How the data is transmitted across the internet can influence latency significantly. For example, the data may travel over optical fibre, copper cable, or wireless links such as Wi-Fi, often across a wide area network (WAN) that combines several of these. Each medium has a different transmission rate and can therefore affect the speed at which the information is delivered.

Protocols and Formats

The streaming protocols and encoding formats involved can also affect the latency of the stream. Many low-latency streaming use cases today adopt the HLS protocol. In its low-latency configurations, HLS is expected to achieve latency of below two seconds and is well suited to scaling interactive streaming. DASH is a similar protocol that also delivers video quickly at scale.

WebRTC is another protocol that is growing in popularity because browsers support it natively. It supports interactivity at 500-millisecond latency, but can be difficult to scale.

Router

Routers connect multiple devices to a common Internet Service Provider (ISP). They act as intermediary devices that pass information between the wider internet and individual end-user devices. In the process they analyze the headers of the data packets they forward, and may add information to them, which introduces extra latency.

Bandwidth

Bandwidth refers to the amount of data that can be transferred per second. The higher the bandwidth of a network, the less time each chunk of video spends in transmission and waiting behind other traffic, and the lower the latency.
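As a rough illustration of how bandwidth feeds into delay, the sketch below computes how long it takes to push a chunk of data onto a link. The function name and the example figures (a 2 MB video segment, 10 and 100 Mbit/s links) are assumptions chosen for the arithmetic, not measurements.

```python
def transmission_time_s(size_bytes: int, bandwidth_bps: float) -> float:
    """Seconds needed to put `size_bytes` on a link of `bandwidth_bps` bits per second."""
    return size_bytes * 8 / bandwidth_bps


# Hypothetical 2 MB video segment on two different links.
segment_bytes = 2_000_000
for label, bps in [("10 Mbit/s", 10_000_000), ("100 Mbit/s", 100_000_000)]:
    print(f"{label}: {transmission_time_s(segment_bytes, bps):.2f} s")
```

Under these assumptions the same segment takes 1.6 seconds on the slower link and 0.16 seconds on the faster one. This covers transmission time only; it does not account for distance or processing along the path.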

Distance

For any data transmitted over the internet, the distance that it has to travel is a major factor that affects latency. The longer this distance is, the more time it takes for the data packets to travel to and from each party and the bigger the latency. For example, if the source of your streaming is in New York and some of your viewers are located in Asia, they will experience greater latency, simply because of the extra distance the data has to travel.
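The effect of distance can be estimated with simple arithmetic. The sketch below assumes signals travel through optical fibre at about 200,000 km per second (roughly two thirds of the speed of light in a vacuum) and uses a hypothetical path length of 11,000 km as a stand-in for a New York to East Asia route. Real routes are longer than the straight-line distance and add router and queuing delay on top.

```python
FIBRE_SPEED_KM_PER_S = 200_000  # assumption: approximate signal speed in optical fibre


def propagation_delay_ms(distance_km: float, speed_km_per_s: float = FIBRE_SPEED_KM_PER_S) -> float:
    """One-way propagation delay in milliseconds over `distance_km`."""
    return distance_km / speed_km_per_s * 1000


one_way = propagation_delay_ms(11_000)  # hypothetical New York to East Asia path
print(f"one way: {one_way:.0f} ms, round trip: {2 * one_way:.0f} ms")
```

With these numbers the one-way delay is about 55 ms and the round trip about 110 ms, before any other source of latency is counted.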

When is Low Latency Essential?

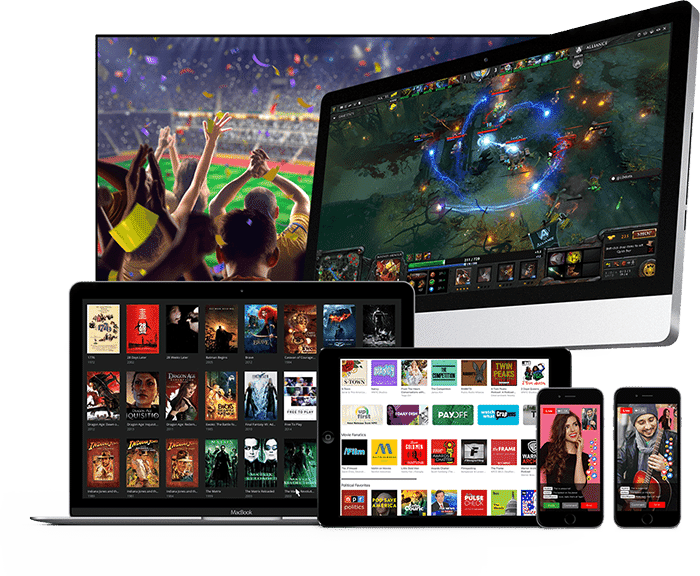

For on-demand streaming, such as movies, music, or broadcasts of pre-recorded video, latency may not be a critical issue. But for live events or real-time communication, any latency can be problematic. This is when low latency technologies become desirable. Think of virtual events, meetings and conferences, webinars, online classes, and interactive sessions. All of these depend on real-time video transmission with as little latency as possible.

For some use cases, low latency also becomes a necessity, and even a competitive selling point for businesses to stay ahead of the market. Here are some of these scenarios:

Online Gaming

Online gaming is a booming industry. According to Statista, the online gaming market grew by a record 21.9 percent in 2020 compared to the previous year. It is an industry that relies on streaming content with minimal latency so that users can engage and interact in real time. Think of games where users need to aim at moving targets and shoot to move ahead, or multiplayer games that rely on frequent discussions between participants. Because timing is critical in most of these games, the desired latency can drop below 100 milliseconds.

Live Streaming

Just like gaming, businesses built on live streaming content also saw demand rise during COVID. Live video sessions for meditation, fitness, and counselling, educational sessions such as cooking classes, and other video-based entertainment platforms have become hugely popular. Think of Peloton classes, Twitch streaming, watch parties during global sporting events and the like, which combine live video streaming with real-time two-way data exchange with participants.

Live Auction

Another scenario where low latency is absolutely essential is live auctions. These often revolve around sporting events and betting activities, where participants require quick real-time communication. The high stakes and financial consequences associated with these auctions demand ultra low latency streaming to ensure that everyone is on a level playing field and that no one misses out due to delays. Financial trading platforms are a related example, where delays of fractions of a second can lead to differences of billions of dollars.

Video Chatting

It is a given that regular human communication through video platforms today needs real-time streaming with minimal delays. Professional video conferences, office meetings, and social catchups depend on fluid, uninterrupted communication. Delays, audio lags and video freezing can lead to unpleasant experiences and affect productivity and morale of teams.

How CDNetworks Can Help You Achieve Low Latency Streaming

Addressing the factors outlined above that affect network latency is a must for achieving low latency streaming. Of these, adopting a content delivery network (CDN) can make a big difference, as it brings resources closer to end users by caching data in geographically distributed edge servers, or Points of Presence (PoPs). Once the data is cached, users’ requests are sent to the nearest Point of Presence to retrieve it. CDNetworks provides ultra low latency streaming experiences with bandwidth scalability, multi-protocol support, access control and many other features. To see how it can help your business stream high quality video content without delays, try the solution for free today.
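To picture what sending a request to the nearest Point of Presence means, the sketch below picks the closest PoP by great-circle distance. The PoP names and coordinates are hypothetical, and this is only an illustration of the idea: production CDNs typically choose a PoP using DNS, anycast routing, or measured latency rather than geography alone.

```python
import math

# Hypothetical PoP locations as (latitude, longitude) in degrees.
POPS = {
    "new-york": (40.7128, -74.0060),
    "frankfurt": (50.1109, 8.6821),
    "singapore": (1.3521, 103.8198),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest_pop(user_lat: float, user_lon: float) -> tuple[str, float]:
    """Return the name of the closest PoP and its distance in kilometres."""
    name = min(POPS, key=lambda n: haversine_km(user_lat, user_lon, *POPS[n]))
    return name, haversine_km(user_lat, user_lon, *POPS[name])


# A viewer in Tokyo is served from the Singapore PoP in this toy setup.
print(nearest_pop(35.6762, 139.6503))
```

Serving that viewer from a PoP a few thousand kilometres away instead of from an origin in New York shortens the path the data has to travel, which is where the latency saving comes from.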